Figure 17.1 Code dealing with time is complex because time itself is complex.

serde time module and chrono craterayon crateanyhow and thiserror crateslazy_static and once_cell crates This chapter is sort of a cookbook of some of the most popular external crates. These crates are so common that you can almost think of them as extensions of the standard library—they’re not just random crates sitting around that nobody uses. Learning just these few crates will allow you to turn data like JSON into Rust structs, work with time and time zones, handle errors with less code, speed up your code, and work with global statics.

You’ll also learn about blanket trait implementations, which are extremely fun. With those, you can give your trait methods to other people’s types even if they didn’t ask for them!

The serde crate is an extremely popular crate that lets you convert to and from formats like JSON, YAML, and so on. In fact, it’s so popular that it’s rare to find a Rust programmer who has never heard of it.

JSON is one of the most common ways to send requests and receive information online, and it’s is pretty simple, being made up of keys and values. Here is what it looks like:

{

"name":"BillyTheUser",

"id":6876

}

[

{

"name":"BobbyTheUser",

"id":6877

},

{

"name":"BillyTheUser",

"id":6876

}

]

So how do you turn something like "name": "BillyTheUser" into a Rust type of your own? JSON only has seven(!) data types, while Rust has a nearly unlimited number. In your own data type in Rust, you might want "BillyTheUser" to be a String, a &str, a Cow, your own type such as a UserName(String), or almost anything else. Doing this conversion between Rust and other formats like JSON is what serde is for.

The most common way to use it is by creating a struct with serde’s Serialize and/or Deserialize attributes on top. To work with the data from the previous example, we could make a struct like this with serde’s attributes to let us convert between Rust and JSON:

use serde::{Serialize, Deserialize};

#[derive(Serialize, Deserialize, Debug)]

struct User {

name: String,

id: u32,

}

Serialize is used to turn your Rust type into another format like JSON, while Deserialize is the other way around: it’s the trait to turn another format into a Rust type. That’s also where the name comes from: “Ser” from serialize and “De” from deserialize make the name serde. If you are curious about how serde does this, take a look at the page on the Serde data model at https://serde.rs/data-model.html.

If you are using JSON, you will also need to use the serde_json crate; for YAML, you will need serde_yaml, and so on. Each of these crates works on top of the serde data model for its own separate data format.

Here’s a really simple example where we imagine that we have a server that takes requests to make new users. The request needs a user name and a user ID, so we make a struct called NewUserRequest that has these fields. As long as these fields are in the request, it will deserialize correctly, and our NewUserRequest will work. To do this, we use serde_json’s from_str() method:

use serde::{Deserialize, Serialize};

use serde_json;

#[derive(Debug, Serialize, Deserialize)]

struct User {

name: String,

id: u32,

is_deleted: bool,

}

#[derive(Debug, Serialize, Deserialize)]

struct NewUserRequest {

name: String,

id: u32,

}

impl From<NewUserRequest> for User {

fn from(request: NewUserRequest) -> Self {

Self {

name: request.name,

id: request.id,

is_deleted: false,

}

}

}

fn handle_request(json_request: &str) {

match serde_json::from_str::<NewUserRequest>(json_request) {

Ok(good_request) => {

let new_user = User::from(good_request);

println!("Made a new user! {new_user:#?}");

println!(

"Serialized back into JSON: {:#?}",

serde_json::to_string(&new_user)

);

}

Err(e) => {

println!("Got an error from {json_request}: {e}");

}

}

}

fn main() {

let good_json_request = r#"

{

"name": "BillyTheUser",

"id": 6876

}

"#;

let bad_json_request = r#"

{

"name": "BobbyTheUser",

"idd": "6877"

}

"#;

handle_request(good_json_request);

handle_request(bad_json_request);

}

Made a new user! User {

name: "BillyTheUser",

id: 6876,

is_deleted: false,

}

Serialized back into JSON: Ok(

"{\"name\":\"BillyTheUser\",\"id\":6876,\"is_deleted\":false}",

)

Got an error from

{

"name": "BobbyTheUser",

"idd": "6877"

}

: missing field `id` at line 5 column 5

And because User implements Serialize, it could then be turned back into JSON if we needed to send it somewhere else in that format.

Serde has a lot of customizations depending on how you want to serialize or deserialize a type. For example, if you have an enum that you need to be in all capitals when serialized, you can stick this on top: #[serde(rename_all = "SCREAMING_SNAKE_ CASE")], and serde will do the rest. The serde documentation has information on these attributes (https://serde.rs/container-attrs.html).

The next crate we are going to look at is called chrono (https://crates.io/crates/chrono), which is the main crate for those who need functionality for time, such as formatting dates, setting time zones, and so on. But you might be wondering: Why not just use the time module in the standard library? The answer is simple: std::time is minimal, and there isn’t much you can do with std::time alone. It does have some useful types, though, so we will start with this module before we move on to chrono.

The simplest way to start with the time module is by getting a snapshot of the present moment with Instant::now(). This returns an Instant that we can print out:

use std::time::Instant;

fn main() {

let time = Instant::now();

println!("{:?}", time);

}

However, the output of an Instant is maybe a bit surprising. On the Playground, it will look something like this:

Instant { tv_sec: 949256, tv_nsec: 824417508 }

If we do a quick calculation, 949,256 seconds is just under 11 days. There is a reason for this: an Instant shows the time since the system booted up, not the time since a set date. Obviously, this won’t be able to help us know today’s date, or the month or the year. The page on Instant tells us that it isn’t supposed to be useful on its own, describing it as

Opaque and useful only with Duration.

The page explains that Instant is “often useful for tasks such as measuring benchmarks or timing how long an operation takes.” And a Duration, as you might guess, is a struct that is used to show how much time has passed.

We can see how Instant and Duration work together if we look at the traits implemented for Instant. For example, one of them is Sub<Instant>, which lets us use the minus symbol to subtract one Instant from another. Let’s click on the [src] button to see the source code. It’s not too complicated:

impl Sub<Instant> for Instant {

type Output = Duration;

fn sub(self, other: Instant) -> Duration {

self.duration_since(other)

}

}

It looks like Instant has a method called .duration_since() that produces a Duration. Let’s try subtracting one Instant from another to see what we get. We’ll make two of them by using the Instant::now() function twice, and then we’ll make the program busy for a while. Then we’ll make one more Instant::now(). Finally, we’ll see how long it took:

use std::time::Instant;

fn main() {

let start_of_main = Instant::now();

let before_operation = Instant::now(); ①

let mut new_string = String::new();

loop {

new_string.push('წ'); ②

if new_string.len() > 100_000 {

break;

}

}

let after_operation = Instant::now(); ③

println!("{:?}", before_operation - start_of_main);

println!("{:?}", after_operation - start_of_main);

}

① Nothing happened between these two variables, so the Duration should be extremely small.

② Now we’ll give the program some busy work. It has to push this Georgian letter onto new_string until it is 100000 bytes in length.

③ Then we’ll make a new Instant after the busy work is done and see how long everything took.

This code will print something like this:

1.025µs 683.378µs

So that’s just over 1 microsecond versus 683 microseconds. Subtracting one Instant from another Instant shows us that the program did indeed take some time to work on the task that we gave it.

There is also a method called .elapsed() that lets us do the same thing without creating a new Instant every time. The following code gives the same output as the previous example, except that it just calls .elapsed() to see how much time has gone by since the first Instant:

use std::time::Instant;

fn main() {

let start = Instant::now();

println!("Time elapsed before busy operation: {:?}", start.elapsed());

let mut new_string = String::new();

loop {

new_string.push('წ');

if new_string.len() > 100_000 {

break;

}

}

println!("Operation complete. Time elapsed: {:?}", start.elapsed());

}

The output is the same as before.

By the way, the Opaque and useful only with Duration comment feels like a challenge. Surely we can find some use for this. Let’s have some fun by implementing a really bad random number generator. We saw that an Instant when printed using Debug has a lot of numbers that are different each time. We can use .chars() to turn this into an iterator and.rev() to reverse it and then filter out chars that aren’t digits. Instead of .parse(), we can use a convenient .to_digit() method that char has that returns an Option. The code looks like this:

use std::time::Instant;

fn bad_random_number(digits: usize) {

if digits > 9 {

panic!("Random number can only be up to 9 digits");

}

let now_as_string = format!("{:?}", Instant::now());

now_as_string

.chars()

.rev()

.filter_map(|c| c.to_digit(10)) ①

.take(digits)

.for_each(|character| print!("{}", character));

println!();

}

fn main() {

bad_random_number(1);

bad_random_number(1);

bad_random_number(3);

bad_random_number(3);

}

① The .to_digit() method here is taking a 10 because we want a decimal number (0–9). We could have used .to_digit(2) for binary, .to_digit(16) for hexadecimal, and so on.

The code will print something like the following:

6 4 967 180

The function is called bad_random_number() for a good reason. For example, if we choose to print nine digits, the final numbers won’t be very random anymore:

855482162 155882162 688592162

That’s because after a few digits we have printed out all the nanoseconds and are now printing out the seconds, which will not change much during this short code sample. So definitely stick with crates like rand and fastrand.

The time module has two more items to note: a struct called SystemTime and a const called UNIX_EPOCH, the Unix epoch representing midnight on the 1st of January, 1970. The SystemTime struct can be used to get the current date or at least the number of seconds that have passed since 1970. The page on SystemTime (https://doc.rust-lang.org/std/time/struct.SystemTime.html) has a nice clear explanation of what makes it different from Instant, so let’s just read what is written there:

A measurement of the system clock, useful for talking to external entities like the file system or other processes.

Distinct from the Instant type, this time measurement is not monotonic. This means that you can save a file to the file system, then save another file to the file system, and the second file has a SystemTime measurement earlier than the first. In other words, an operation that happens after another operation in real time may have an earlier SystemTime!

Knowing this, let’s do a quick comparison of the two by printing each one out:

use std::time::{Instant, SystemTime};

fn main() {

let instant = Instant::now();

let system_time = SystemTime::now();

println!("{instant:?}");

println!("{system_time:?}");

}

The output will look something like this:

Instant { tv_sec: 956710, tv_nsec: 22275264 }

SystemTime { tv_sec: 1676778839, tv_nsec: 183795450 }

And if we do the math, 95,6710 seconds (from Instant) turns out to be about 11 days. But 1,676,778,839 seconds (from SystemTime) turns out to be a bit over 53 years, which is exactly how much time has passed since 1970 when this code was run.

For a more readable output, we can use .duration_since() and put UNIX_EPOCH inside:

use std::time::{SystemTime, UNIX_EPOCH};

fn main() {

println!("{:?}", SystemTime::now().duration_since(UNIX_EPOCH).unwrap());

}

This will print something like 1676779741.912581202s. And that’s pretty much all the standard library has for printing out dates. It doesn’t have anything to turn 1,676,779,741 seconds into a human-readable date, apply a time zone, or anything like that.

We have one last item in std::time before moving on to chrono: putting threads to sleep by passing in a Duration. Inside a thread, you can use std::thread::sleep() to make the thread stop for a while. If you aren’t using multiple threads, this function will make the entire program sleep, as there are no other threads to do anything while the main thread is asleep. To use this function, you have to give it a Duration. Creating a Duration is fairly simple: pick the method that matches the unit of time you want to use and give it a number. Duration::from_millis() is used to stop for a number of milliseconds, Duration::from_secs() for seconds, and so on. Here’s one example:

use std::time::Duration;

use std::thread::sleep;

fn main() {

let three_seconds = Duration::from_secs(3);

println!("I must sleep now.");

sleep(three_seconds);

println!("Did I miss anything?");

}

The output is just the first line followed by the second line 3 seconds later:

I must sleep now. Did I miss anything?

Enough waiting, let’s move on to chrono!

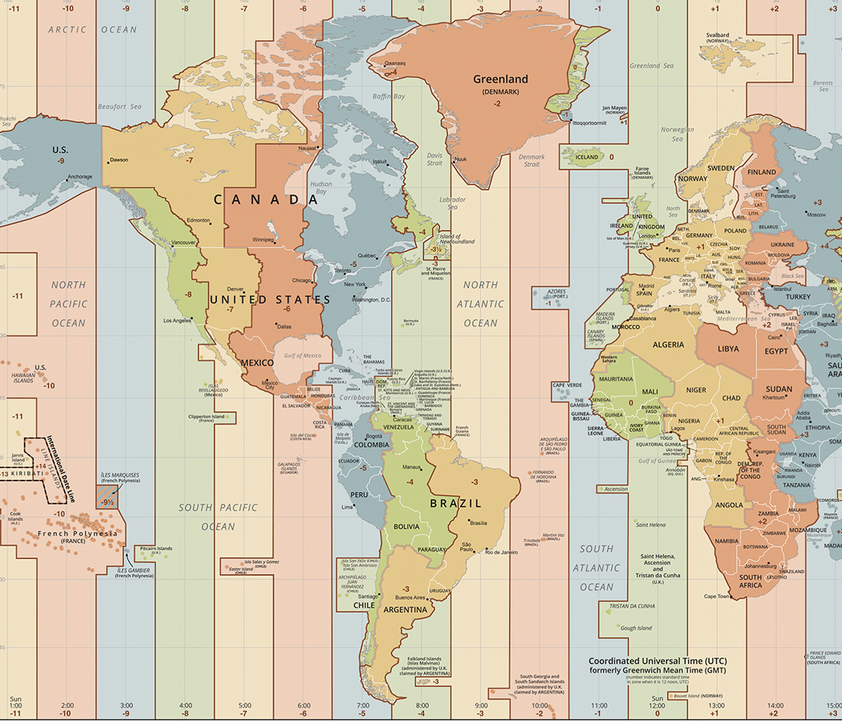

Time is a pretty complex subject, thanks to a combination of astronomy and history. Astronomically, time basically has to do with measuring the rotation of the Earth around the Sun, the spinning of the Earth around itself, and cutting the Earth into time zones so that everyone can have a similar idea when they see the time on the clock (figure 17.1). With this, 12 pm means noon when the Sun is high (well, usually), 6 am is early morning, 12 am is midnight when the day changes, and so on, no matter where you are on the planet.

Historically, time is just as complex. We have a lot of different calendars. One year has 365 days, one day has 24 hours (thanks to the Egyptians), and months have different lengths (thanks to the Roman emperors), and we count using 60 instead of 100 (thanks to the Sumerians). Plus, we have leap years and even leap seconds! It’s probably not surprising that the types inside the chrono crate can be complex, too.

But there are some fairly simple types inside chrono, which start with Naive: NaiveDate, NaiveDateTime, and so on. Naive here means that they don’t have any time zone info. The easiest way to create them is with the methods that start with from_ and end with _opt. A quick example will be the easiest way to demonstrate this:

use chrono::naive::{NaiveDate, NaiveTime};

fn main() {

println!("{:?}", NaiveDate::from_ymd_opt(2023, 3, 25));

println!("{:?}", NaiveTime::from_hms_opt(12, 5, 30));

println!("{:?}", NaiveDate::from_ymd_opt(2023, 3, 25).unwrap().and_hms_opt(12, 5, 30));

}

Some(2023-03-25) Some(12:05:30) Some(2023-03-25T12:05:30)

Here, ymd stands for “year month day,” and hms stands for “hour minutes seconds.” The first println! shows an Option<NaiveDate>; the second, an Option<NaiveTime>; and the third, an Option<NaiveDateTime>. The .and_hms_opt() method turns a NaiveDate into a NaiveDateTime by giving it the hour, minutes, and seconds needed to know the time of day.

You might be wondering: Why do all these methods have an _opt at the end? This brings us to an interesting discussion. Let’s change the subject just a little bit.

The simple answer to the previous question is that the _opt at the end of these methods is because they return an Option. But then again, none of the other methods we have seen in this book that return an Option have an _opt at the end. Why are these method names so long?

It’s an interesting story. If you take a look at the history of the chrono crate, you can see a change made as recently as November 2022 to deprecate the methods without _opt, because inside there was a chance that they could panic. For example, the from_ymd() method simply calls from_ymd_opt() with .expect(), and “panics on out-of-range date, invalid month and/or day”:

/// Makes a new `NaiveDate` from the [calendar date](#calendar-date)

/// (year, month and day).

///

/// Panics on the out-of-range date, invalid month and/or day.

#[deprecated(since = "0.4.23", note = "use `from_ymd_opt()` instead")]

pub fn from_ymd(year: i32, month: u32, day: u32) -> NaiveDate {

NaiveDate::from_ymd_opt(year, month, day).expect("invalid or out-

➥of-range date")

}

Perhaps too many people were using methods like .from_ymd() without reading the note on possible panics, and the crate authors decided to make it clear that the method could fail.

In fact, the chrono crate is planning to change these methods again (https://github.com/chronotope/chrono/issues/970) to return a Result instead of an Option with a different name that begins with try_, such as try_from_ymd(). So, by the time you read this book, the chrono crate might have changed the methods a little bit.

In any case, the small lesson here is that you should click on the source for methods you use in other crates and do a quick check for possible panics. Sometimes crate authors decide that a small chance of panic is worth it in exchange for extra convenience or if it makes sense to panic. For example, every thread made by the thread::spawn() method in the standard library is given a certain amount of memory to use, and the program will panic if the operating system is unable to create a thread, which usually comes from running out of memory. (The documentation for spawn() mentions this possibility, by the way.)

We can give this a try ourselves! Let’s spawn 100,000 threads and see whether the Playground runs out of memory:

fn main() {

for _ in 0..100000 {

std::thread::spawn(|| {});

}

}

thread 'main' panicked at 'failed to spawn thread: Os { code: 11, kind:

➥WouldBlock, message: "Resource temporarily unavailable" }',

In any case, it was probably a good idea for the authors of chrono to make it clearer to users that these methods may fail. Now, let’s return to the crate again.

We will finish up our quick look at the chrono crate with an example that shows the following:

Using SystemTime and the UNIX_EPOCH const to get the number of seconds since 1970

Using a NaiveDateTime that uses these seconds to display the date and time without a time zone

Creating a FixedOffset to create a time zone that differs from Utc

This is generally the sort of tinkering you will do when working with chrono. Here is the code:

use std::time::SystemTime;

use chrono::{DateTime, FixedOffset, NaiveDateTime, Utc};

fn main() {

let now = SystemTime::now().duration_since(SystemTime::

➥UNIX_EPOCH).unwrap(); ①

let seconds = now.as_secs(); ②

println!("Seconds from 1970 to today: {seconds}");

let naive_dt = NaiveDateTime::from_timestamp_opt

➥(seconds as i64, 0).unwrap(); ③

println!("As NaiveDateTime: {naive_dt}");

let utc_dt = DateTime::<Utc>::from_utc(naive_dt, Utc); ④

println!("As DateTime<Utc>: {utc_dt}");

let kyiv_offset = FixedOffset::east_opt(3 * 60 * 60)

➥.unwrap(); ⑤

let kyiv_dt: DateTime::<FixedOffset> = DateTime::from_utc(naive_dt,

➥kyiv_offset); ⑥

println!("In a timezone 3 hours from UTC: {kyiv_dt}");

let kyiv_naive_dt = kyiv_dt.naive_local(); ⑦

println!("With timezone information removed: {kyiv_naive_dt}");

}

① We learned to use SystemTime and .duration_since() with the UNIX_EPOCH just above, and this will give us a Duration to work with.

② To construct a NaiveDateTime, we need to give it seconds and nanoseconds. We could also use .as_nanos() to get the nanoseconds in the Duration, but we don’t care about being that exact.

③ The .as_secs() method gave us a u64. NaiveDateTime::from_timestamp_opt() takes an i64, and we’re pretty sure that we are living after 1970 when the Unix epoch began, so the number won’t be negative.

④ You can make a time zone-aware DateTime from NaiveDateTime if you give it a time zone. The Utc time zone is its own type in chrono, so we can just stick it in.

⑤ For other time zones, we have to make an offset. Kyiv is three hours east of Utc, which is 3 hours * 60 minutes per hour * 60 seconds per minute.

⑥ Then we can construct a DateTime in basically the same way as the Utc DateTime above.

⑦ And we can turn it back into a NaiveDateTime, removing the time zone information.

The output will look something like this:

Seconds from 1970 to today: 1683253399 As NaiveDateTime: 2023-05-05 02:23:19 As DateTime<Utc>: 2023-05-05 02:23:19 UTC In a timezone 3 hours from UTC: 2023-05-05 05:23:19 +03:00 With timezone information removed: 2023-05-05 05:23:19

That should give us some idea of how to work with time using chrono. It requires reading the documentation thoroughly and finding the right way to convert from one type into another.

For our final example, let’s think of something a bit closer to what we might build ourselves. The following code imagines that we are working with a service that receives events with a UTC timestamp and some data. We then need to turn these timestamps into the Korea/Japan time zone (9 hours ahead of UTC) and make them into a KoreaJapanUserEvent struct. This time, we’ll also create two small tests to confirm that the data is what we expect it to be:

use chrono::{DateTime, FixedOffset, Utc};

use std::str::FromStr; ①

const SECONDS_IN_HOUR: i32 = 3600; ②

const UTC_TO_KST_HOURS: i32 = 9;

const UTC_TO_KST_SECONDS: i32 = UTC_TO_KST_HOURS * SECONDS_IN_HOUR;

#[derive(Debug)] ③

struct UtcUserEvent {

timestamp: &'static str,

data: String,

}

#[derive(Debug)] ④

struct KoreaJapanUserEvent {

timestamp: DateTime<FixedOffset>,

data: String,

}

impl From<UtcUserEvent> for KoreaJapanUserEvent { ⑤

fn from(event: UtcUserEvent) -> Self {

let utc_datetime: DateTime<Utc> =

➥DateTime::from_str(event.timestamp).unwrap();

let offset = FixedOffset::east_opt(UTC_TO_KST_SECONDS).unwrap();

let timestamp: DateTime<FixedOffset> =

➥DateTime::from_utc(utc_datetime.naive_utc(), offset);

Self {

timestamp,

data: event.data,

}

}

}

fn main() {

let incoming_event = UtcUserEvent {

timestamp: "2023-03-27 23:48:50 UTC",

data: "Something happened in UTC time".to_string(),

};

println!("Event as Utc:\n{incoming_event:?}");

let korea_japan_event = KoreaJapanUserEvent::from(incoming_event);

println!("Event in Korea/Japan time:\n{korea_japan_event:?}");

}

#[test] ⑥

fn utc_to_korea_output_same_evening() {

let morning_event = UtcUserEvent {

timestamp: "2023-03-27 09:48:50 UTC",

data: String::new(),

};

let to_korea_japan = KoreaJapanUserEvent::from(morning_event);

assert_eq!(

&to_korea_japan.timestamp.to_string(),

"2023-03-27 18:48:50 +09:00"

);

}

#[test] ⑥

fn utc_to_korea_output_next_morning() {

let evening_event = UtcUserEvent {

timestamp: "2023-03-27 23:59:59 UTC",

data: String::new(),

};

let korea_japan_next_morning = KoreaJapanUserEvent::from(evening_event);

assert_eq!(

&korea_japan_next_morning.timestamp.to_string(),

"2023-03-28 08:59:59 +09:00"

);

}

① This use statement lets us use the DateTime::from_str() method. You’ll learn how this works in the section on blanket trait implementations just below.

② Nine hours is 32,400 seconds. We could write 32,400, but having const values makes the code easy for others to follow.

③ This UtcUserEvent struct represents data that we get from outside the service.

④ This KoreaJapanUserEvent is what we want to turn the UtcUserEvent into.

⑤ We construct a DateTime<Utc>, bring in the Offset to change the time zone, and make a DateTime<FixedOffset> out of it.

⑥ Finally, two short tests with one assertion in each. This is a nice way to show expected behavior to people reading your code without needing to print things out in main().

Here is the output for this final example:

Event as Utc:

UtcUserEvent { timestamp: "2023-03-27 23:48:50 UTC", data: "Something

➥happened in UTC time" }

Event in Korea/Japan time:

KoreaJapanUserEvent { timestamp: 2023-03-28T08:48:50+09:00, data:

➥"Something happened in UTC time" }

That should be enough to get you started on using chrono to work with time. To finish things off, here are two other crates to take a look at:

The time crate (https://docs.rs/time/latest/time/), which is similar to chrono but smaller and simpler. Both chrono and time are on the list of Rust’s “blessed” crates.

chrono_tz (https://docs.rs/chrono-tz/latest/chrono_tz/), which makes working with time zones in chrono much easier.

The next crate, Rayon, also has something to do with time: it’s about reducing the time it takes for your code to run! Let’s take a look at how it works.

Rayon is a popular crate that lets you speed up your Rust code by automatically spawning multiple threads when working with iterators and related types. Instead of using thread::spawn() to spawn threads, you can just add par_ to the iterator methods you already know.

For example, Rayon has .par_iter() for .iter(), while the methods .iter_mut(), .into_iter(), and .chars() in Rayon are simply .par_iter_mut(), .par_into_ iter(), and .par_chars(). (You can probably imagine that par means parallel because it uses threads working in parallel.)

Here is an example of a simple piece of code that might be making the computer do a lot of work:

fn main() {

let mut my_vec = vec![0; 2_000_000];

my_vec

.iter_mut()

.enumerate()

.for_each(|(index, number)| *number += index + 1);

println!("{:?}", &my_vec[5000..5005]);

}

It creates a vector with 2,000,000 items: each one is 0. Then it calls .enumerate() to get the index for each number and changes the 0 to the index number plus 1. It’s too long to print, so we only print items from index 5000 to 5004 (the output is [5001, 5002, 5003, 5004, 5005]). To potentially speed this up with Rayon, you can write almost the same code:

use rayon::prelude::*; ① fn main() { let mut my_vec = vec![0; 2_000_000]; my_vec .par_iter_mut() .enumerate() .for_each(|(index, number)| *number += index + 1); ② }

And that’s it! Rayon has many other methods to customize what you want to do, but at its simplest, it is “add _par to make your program faster.”

But how much faster? And why did we say the first code sample might be a lot of work for a computer and that Rayon can potentially speed it up?

We can do a simple test to see how much faster Rayon is. First, we will use a method inside the std::thread module called available_parallelism() to see how many threads will be spawned. Rayon uses a method similar to this to decide on the best number of threads. Then we will create an Instant, change the Vec as in the previous example, and then use .elapsed() to see how much time went by. We will do this 10 times and stick the result in microseconds each time into a Vec, and then print out the average at the end:

use rayon::prelude::*;

use std::thread::available_parallelism;

fn main() {

println!(

"Estimated parallelism on this computer: {:?}",

available_parallelism()

);

let mut without_rayon = vec![]; ①

let mut with_rayon = vec![];

for _ in 0..10 {

let mut my_vec = vec![0; 2_000_000];

let now = std::time::Instant::now();

my_vec.iter_mut().enumerate().for_each(|(index, number)| {

*number += index + 1;

*number -= index + 1;

});

let elapsed = now.elapsed();

without_rayon.push(elapsed.as_micros()); ②

let mut my_vec = vec![0; 2_000_000];

let now = std::time::Instant::now();

my_vec

.par_iter_mut()

.enumerate()

.for_each(|(index, number)| {

*number += index + 1;

*number -= index + 1;

});

let elapsed = now.elapsed();

with_rayon.push(elapsed.as_micros());

}

println!(

"Average time without rayon: {} microseconds",

without_rayon.into_iter().sum::<u128>() / 10

);

println!(

"Average time with rayon: {} microseconds",

with_rayon.into_iter().sum::<u128>() / 10

);

}

① Inside these Vecs, we will push the time that elapsed during each test.

② There are other methods too like .as_nanos() and .as_millis(). Microseconds should be precise enough for us.

The speedup that Rayon gives will depend a lot on your code and the number of threads on your computer. This is quite clear when using the Playground, where the available parallelism is only 2. Surprisingly, the output will usually show only a moderate benefit:

Estimated parallelism on this computer: Ok(2) Average time without rayon: 64570 microseconds Average time with rayon: 56822 microseconds

And using Rayon will sometimes be slower in this case. On my computer, however, and probably on your computer, Rayon will use more threads and thus will show a much larger improvement. Here is one output from my computer:

Estimated parallelism on this computer: Ok(12) Average time without rayon: 27633 microseconds Average time with rayon: 9661 microseconds

And here is another surprise: if you click on Debug in the Playground and change it to Release, the code will take longer to compile but will run faster. In this case, Rayon is incredibly slow in comparison:

Estimated parallelism on this computer: Ok(2) Average time without rayon: 0 microseconds Average time with rayon: 87 microseconds

In fact, the code without Rayon is so fast that we would need to use the .as_nanos() method instead of .as_micros() even to see how long it took. Then, it will produce an output similar to this:

Estimated parallelism on this computer: Ok(2) Average time without rayon: 74 microseconds Average time with rayon: 113832 microseconds

That is a huge slowdown! This is because during release mode, the compiler tries to compute the result of methods ahead of time—especially for one as simple as this where we are just changing a few numbers. In effect, it just generates code to return the result without calculating anything at run time. (This is called optimization, and we will learn more about it in the next chapter.) But the Rayon code involves a lot of threading that makes the code more complex. That means that the compiler isn’t able to know the result until the code runs, so it ends up being slower. In short, Rayon might speed up your code. But be sure to check!

These two crates are used to help you with error handling. They are actually both made by the same person (David Tolnay) and are somewhat different.

Let’s imagine why someone might use these crates. Much of the time, Rust code is written in the following way:

A developer starts writing some code and uses .unwrap() or .expect() everywhere. This is fine at the beginning because you want to compile now and think about errors later. And it doesn’t matter if the program panics at this point—nobody else is using it.

The code starts to work, and you want to start handling errors properly. But it would be nice to have a single error type that’s easy to use. This is what Anyhow is used for. (A Box<dyn Error> is another common way to do this, as we have seen.)

Maybe later on, you decide you want your own error types, but implementing them manually is a lot of typing, and you want something more ergonomic. This is what thiserror is used for (although a lot of people just stick with Anyhow if it gets the job done).

Let’s think about a quick example where we might want to deal with multiple errors. This code won’t compile yet, but you can see the idea. We would like to take a slice of bytes and turn it into a &str. Then we’ll try to parse it into an i32. After that, we’ll send it to another function of ours that will send an Ok if the number is under 1 million. So, that’s three types of errors that could happen.

Also note that we are using std::io::Error as the return type in one of our functions. That error type is a fairly convenient one because it has an ErrorKind enum inside it with a huge number of variants. However, in this code sample, we are trying to use the question mark operator for methods that may return a different of error kind, so we can’t choose std::io::Error as a return value:

use std::io::{Error, ErrorKind};

fn parse_then_send(input: &[u8]) { ①

let some_str = std::str::from_utf8(input)?;

let number = some_str.parse::<i32>()?;

send_number(number)?;

}

fn send_number(number: i32) -> Result<(), Error> {

if number < 1_000_000 {

println!("Number sent!");

Ok(())

} else {

Err(Error::new(ErrorKind::InvalidData))

}

}

fn main() {}

This is where Anyhow comes in handy. Let’s see what Anyhow’s documentation says:

Use Result<T, anyhow::Error>, or equivalently anyhow::Result<T>, as the

➥return type of any fallible function.

Looks good. We can also bring in the anyhow! macro, which makes a quick anyhow::Error from a string or an error type. Let’s give it a try:

use anyhow::{anyhow, Error}; ①

fn parse_then_send(input: &[u8]) -> Result<(), Error> {

let some_str = std::str::from_utf8(input)?;

let number = some_str.parse::<i32>()?;

send_number(number)?;

Ok(())

}

fn send_number(number: i32) -> Result<(), Error> {

if number < 1_000_000 {

println!("Number sent!");

Ok(())

} else {

println!("Too large!");

Err(anyhow!("Number is too large"))

}

}

fn main() {

println!("{:?}", parse_then_send(b"nine"));

println!("{:?}", parse_then_send(b"10"));

}

① Error now means Anyhow’s Error type, not std::io::Error as in the previous example. We could also write `use anyhow::Error as AnyhowError` to give it a different name if we wanted.

Nice! Now anyhow’s Error is our single error type. The code gives this output:

Err(invalid digit found in string) Number sent! Ok(())

That’s not too bad. Note, though, that the first error is a little vague. Anyhow has a number of methods for its Error type that can help here, but a particularly easy one is .with_context(), which takes something that implements Display. You can use that to add some extra info. Let’s add some context:

use anyhow::{anyhow, Context, Error};

fn parse_then_send(input: &[u8]) -> Result<(), Error> {

let some_str = std::str::from_utf8(input)

.with_context(|| "Couldn't parse into a str")?;

let number = some_str

.parse::<i32>()

.with_context(|| format!("Got a weird str to parse: {some_str}"))?;

send_number(number)?;

Ok(())

}

fn send_number(number: i32) -> Result<(), Error> {

if number < 1_000_000 {

println!("Number sent!");

Ok(())

} else {

println!("Too large!");

Err(anyhow!("Number is too large"))

}

}

fn main() {

println!("{:?}", parse_then_send(b"nine"));

println!("{:?}", parse_then_send(b"10"));

}

Now the output is more helpful:

Err(Got a weird str to parse: nine

Caused by:

invalid digit found in string)

Number sent!

Ok(())

So that’s Anyhow. One thing Anyhow isn’t, however, is an actual error type (a type that implements std::error::Error). Anyhow (https://docs.rs/anyhow/latest/anyhow/) suggests using thiserror if we want an actual error type:

Anyhow works with any error type that has an impl of std::error::Error, including ones defined in your crate. We do not bundle a derive(Error) macro but you can write the impls yourself or use a standalone macro like thiserror.

So let’s look at that crate now.

The main convenience in thiserror is a derive macro called thiserror::Error that will quickly turn your type into one that implements std::error::Error. If we imagine that we want to make our code into a library and have a proper error type, we could use thiserror to do this. In this small example we have three possible errors, so let’s make an enum:

enum SystemError {

StrFromUtf8Error,

ParseI32Error,

SendError

}

Now we’ll use thiserror to turn it into a proper error type. You use #[derive(Error)] on top and then another #[error] attribute above each variant if we want a message. This will automatically implement Display. Note that if you print using Debug, you won’t see these extra messages.

You can also use another attribute called #[from] to automatically implement From for other error types. A type created from thiserror usually ends up looking something like this:

#[derive(Error, Debug)] ① enum SystemError { #[error("Couldn't send: {0}")] ② SendError(String), #[error("Couldn't parse into a str: {0}")] ③ StringFromUtf8Error(#[from] Utf8Error), #[error("Couldn't turn into an i32: {0}")] ParseI32Error(#[from] ParseIntError), #[error("Wrong color: Red {0} Green {1} Blue {2}")] ④ ColorError(u8, u8, u8), #[error("Something happened")] OtherError, }

① This here is thiserror’s Error macro. Easy to miss!

② First is a variant unrelated to any other external error types. The zero in these attribute macros means .0 used when accessing a tuple.

③ These next two will hold the information from the Utf8Error and ParseIntError types in the standard library, so we will use #[from].

④ We’ll throw in a ColorError while we’re at it to really make it clear that we are accessing the inner value in the same way we access any other tuple.

You can see that the error attribute has the same format as when you use the format! macro.

Now let’s look at almost the same example we used previously with thiserror instead of Anyhow:

use std::{num::ParseIntError, str::Utf8Error};

use thiserror::Error;

#[derive(Error, Debug)]

enum SystemError {

#[error("Couldn't send: {0}")]

SendError(String),

#[error("Couldn't parse into a str: {0}")]

StringFromUtf8Error(#[from] Utf8Error),

#[error("Couldn't turn into an i32: {0}")]

ParseI32Error(#[from] ParseIntError),

#[error("Wrong color: Red {0} Green {1} Blue {2}")]

ColorError(u8, u8, u8),

#[error("Something happened")]

OtherError,

}

fn parse_then_send(input: &[u8]) -> Result<(), SystemError> {

let some_str = std::str::from_utf8(input)?; ①

let number = some_str.parse::<i32>()?;

send_number(number)?;

Ok(())

}

fn send_number(number: i32) -> Result<(), SystemError> {

match number {

num if num == 500 => Err(SystemError::OtherError), ②

num if num > 1_000_000 => Err(SystemError::SendError(format!(

"{num} is too large, can't send!"

))),

_ => {

println!("Number sent!");

Ok(())

}

}

}

fn main() {

println!("{}", parse_then_send(b"nine").unwrap_err()); ③

println!("{}", parse_then_send(&[8, 9, 0, 200]).unwrap_err());

println!("{}", parse_then_send(b"109080098").unwrap_err());

println!("{}", SystemError::ColorError(8, 10, 200));

parse_then_send(b"10098").unwrap();

}

① Having a From impl makes the code pretty nice here—just use the question mark operator.

② This is just an excuse to use the OtherError variant. 500 is a bad number for some reason.

③ The .unwrap_err() method is like .unwrap() except it panics upon receiving an Ok instead of when receiving an Err. It’s a quick way to get to the error type inside.

Couldn't turn into an i32: invalid digit found in string Couldn't parse into a str: incomplete utf-8 byte sequence from index 3 Couldn't send: 109080098 is too large, can't send! Wrong color: Red 8 Green 10 Blue 200 Number sent!

Pretty slick! With not too many lines of code, we have a proper error enum with all the info we need.

So thiserror lets us implement From for certain other error types to bring into our error enum. What if we wanted to make a variant that implements From for all types that implement std::error::Error? Let’s take a small detour and talk about blanket trait implementations.

A blanket trait implementation lets you implement your trait for other people’s types. Usually, it’s used for every type that implements certain other traits, but you can also implement it on any and all other types if you want.

Let’s start by making a trait that says “Hello”:

trait SaysHello {

fn hello(&self) {

println!("Hello");

}

}

It would be nice to let every other type in the world have this trait. How do we do that? Pretty easy—just give it to a generic type T:

trait SaysHello {

fn hello(&self) {

println!("Hello");

}

}

impl<T> SaysHello for T {}

This generic type T doesn’t have any bounds like Display or Debug, so every Rust type in the whole world counts as a type T. And now every type in our code can call .hello(). Let’s give it a try! Now every type everywhere implements SaysHello:

trait SaysHello {

fn hello(&self) {

println!("Hello");

}

}

impl<T> SaysHello for T {}

struct Nothing;

fn main() {

8.hello();

&'c'.hello();

&mut String::from("Hello there").hello();

8.7897.hello();

Nothing.hello();

std::collections::HashMap::<i32, i32>::new().hello();

}

Now, usually, a blanket trait implementation is implemented for a certain type with a trait of its own, such as <T: Debug>. We are quite familiar with this already: with a Debug trait bound, we know that the type will implement Debug and thus can be printed with {:?}, used as a function parameter that needs Debug, and so on.

In our case, we can make a trait and implement it for anything that implements std::error::Error. We can then use a blanket implementation on anything that implements Error and, if it does, to put it into a variant of our enum. This lets us have our own proper error type while keeping a place to put all the possible errors from external crates. Here’s what it could look like:

use std::error::Error as StdError; ① use thiserror::Error; #[derive(Error, Debug)] enum SystemError { #[error("Couldn't send: {0}")] SendError(String), #[error("External crate error: {0}")] ExternalCrateError(String), ② } trait ToSystemError<T> { fn to_system_error(self) -> Result<T, SystemError>; } impl<T, E: StdError> ToSystemError<T> for Result<T, E> { ③ fn to_system_error(self) -> Result<T, SystemError> { self.map_err(|e| SystemError::ExternalCrateError(e.to_string())) } }

① Gives Error (in the standard library) and Error (in the thiserror crate) different names

② This variant will hold all the external errors.

③ This function will turn a Result<T, E> to a Result <T, SystemError>. Anything with std::error::Error will implement Display, so we can call .to_string() and put it inside the ExternalCrateError variant.

This uses a blanket implementation for anything that is an Error type, turns it into a String, and sticks it into a variant called ExternalCrateError. With this trait, you can then just type .to_system_error()? every time you have code from another source that you want to put into the SystemError enum. Then it looks like this:

use std::error::Error as StdError;

use thiserror::Error;

#[derive(Error, Debug)]

enum SystemError {

#[error("Couldn't send {0}")]

SendError(i32),

#[error("External crate error: {0}")]

ExternalCrateError(String),

}

trait ToSystemError<T> {

fn to_system_error(self) -> Result<T, SystemError>;

}

impl<T, E: StdError> ToSystemError<T> for Result<T, E> {

fn to_system_error(self) -> Result<T, SystemError> {

self.map_err(|e| SystemError::ExternalCrateError(e.to_string()))

}

}

fn parse_then_send(input: &[u8]) -> Result<(), SystemError> {

let some_str = std::str::from_utf8(input).to_system_error()?;

let number = some_str.parse::<i32>().to_system_error()?;

send_number(number).to_system_error()?;

Ok(())

}

fn send_number(number: i32) -> Result<(), SystemError> {

if number < 1_000_000 {

println!("Number sent!");

Ok(())

} else {

println!("Too large!");

Err(SystemError::SendError(number))

}

}

fn main() {

println!("{}", parse_then_send(b"nine").unwrap_err()); ①

println!("{:?}", parse_then_send(b"nine"));

println!("{:?}", parse_then_send(b"10"));

}

① We are calling the function twice to compare the Display and Debug output.

External crate error: invalid digit found in string

Err(ExternalCrateError("invalid digit found in string"))

Number sent!

Ok(())

The standard library has a lot of other blanket implementations that you can find by looking for impl on generic types (usually T). Let’s take a look at a few.

This first one is familiar: with Display, we get the .to_string() method from the ToString trait for free:

impl<T> ToString for T where T: Display + ?Sized,

This next one is also familiar. If you implement From, you get Into for free:

impl<T, U> Into<U> for T

where

U: From<T>,

This next one is interesting. If you have From, you get Into for free, and if you have Into, you also get TryFrom for free:

impl<T, U> TryFrom<U> for T

where

U: Into<T>,

This also makes sense because it would be weird to have a function or parameter that requires a TryFrom<T> but refuses a type that implements From<T>!

Here is the simplest possible example showing these two blanket traits:

#[derive(Debug)]

struct One;

#[derive(Debug)]

struct Two;

impl From<One> for Two { ①

fn from(one: One) -> Self {

Two

}

}

fn main() {

let two: Two = One.into(); ②

let try_two = Two::try_from(One);

println!("{two:?}, {try_two:?}");

}

② Now we get both Into<Two> and TryFrom<One> for free!

There are tons and tons of blanket implementations for From. Let’s pick a fancy one. But if you read it slowly, you’ll be able to figure it out:

impl<K, V, const N: usize> From<[(K, V); N]> for BTreeMap<K, V>

where

K: Ord,

The implementation involves a K (a key) and a V (a value), which makes sense—a BTreeMap uses keys and values.

There is also a const N: usize. That’s const generics! We learned them just in the last chapter.

The [(K, V); N] signature means an array of length N (in other words, any length) that holds tuples of (K, V).

BTreeMaps order their contents, so their keys need to implement Ord.

So, it looks like this is a blanket implementation to construct a BTreeMap from an array of tuples of keys and values, where the keys can be ordered. Let’s try this out to see whether we can make a BTreeMap straight from an array:

use std::collections::BTreeMap;

fn main() {

let my_btree_map = BTreeMap::from([

("customer_1_money".to_string(), 10),

("customer_2_money".to_string(), 200),

]);

}

It works! The array here is of type [(String, i32]; 2].

It’s time to get back to external crates with our last two: lazy_static and OnceCell.

Remember the section in the last chapter on mutable static variables? We saw that starting in Rust 1.63 (summer 2022), this sort of expression became possible because all of these functions are const fns:

static GLOBAL_LOGGER: Mutex<Vec<Log>> = Mutex::new(Vec::new());

Before Rust 1.63, you needed either the crate lazy_static or the crate once_cell to do this.

However, there are still a lot of other static variables you might want to have but can’t initialize with a const fn, and that is what these two crates allow you to do. They are called lazily initiated statics, meaning that they are initiated at run time instead of compile time. lazy_static is the older and simpler crate, so we’ll look at it first.

Let’s imagine that our GLOBAL_LOGGER also wants to send data to a server somewhere else over HTTP. It would be nice to give it a Vec<Log> for the info, a String for the URL to send the requests to, and a Client that will post the data. So something like this would be good to start:

use reqwest::Client;

#[derive(Debug)]

struct Logger {

logs: Vec<Log>,

url: String,

client: Client,

}

#[derive(Debug)]

struct Log {

message: String,

timestamp: i64,

}

By the way, reqwest (note the spelling) is the next external crate that we will look at. For this code sample, we won’t do anything with it, but just remember that reqwest::Client is used for POST, GET, and all other HTTP actions.

But making it a static like this won’t work for us:

use reqwest::Client;

use std::sync::Mutex;

#[derive(Debug)]

struct Logger {

logs: Mutex<Vec<Log>>,

url: String,

client: Client,

}

#[derive(Debug)]

struct Log {

message: String,

timestamp: i64,

}

static GLOBAL_LOGGER: Logger = Logger {

logs: Mutex::new(vec![]),

url: "https://somethingsomething.com".to_string(),

client: Client::default()

};

fn main() {

}

The compiler lets us know that this Logger struct involves functions that aren’t const and thus can’t be called:

error[E0015]: cannot call non-const fn `<str as ToString>::to_string` in ➥statics error[E0015]: cannot call non-const fn `<reqwest::Client as ➥Default>::default` in statics

Even if we change the URL to a Mutex<String>, the Client itself is a non-const fn, so no luck there. And we might want to add more parameters to our Logger struct anyway.

This is where lazy_static comes in. It’s pretty easy. Here’s how its crate describes it (https://docs.rs/lazy_static/latest/lazy_static/):

Using this macro, it is possible to have statics that require code to be executed at runtime in order to be initialized. This includes anything requiring heap allocations, like vectors or hash maps, as well as anything that requires function calls to be computed.

To initiate a lazy static, you can use the lazy_static! and declare a static ref instead of a static. Note that static ref is a term only used by this crate: there’s nothing in Rust itself called a static ref. But it’s called a static ref because of the following:

For a given static ref NAME: TYPE = EXPR;, the macro generates a unique type that implements Deref<TYPE> and stores it in a static with name NAME. (Attributes end up attaching to this type.)

Cool. But we don’t even need to think about that if we don’t want to. Just make a lazy_static! block and put statics in there that we now call static ref instead of static. This part of the code is almost identical to before:

lazy_static! { ①

static ref GLOBAL_LOGGER: Logger = Logger { ②

logs: Mutex::new(vec![]),

url: "https://somethingsomething.com".to_string(),

client: Client::default()

};

}

① We call the lazy_static! macro.

② We call it a static ref instead of a static. Everything else is the same.

And with just those two changes, we have a static that can be called anywhere from the program. Here is what the code looks like now:

use lazy_static::lazy_static;

use reqwest::Client;

use std::sync::Mutex;

#[derive(Debug)]

struct Logger {

logs: Mutex<Vec<Log>>,

url: String,

client: Client,

}

#[derive(Debug)]

struct Log {

message: String,

timestamp: i64,

}

lazy_static! {

static ref GLOBAL_LOGGER: Logger = Logger {

logs: Mutex::new(vec![]),

url: "https://somethingsomething.com".to_string(),

client: Client::default()

};

}

fn main() {

GLOBAL_LOGGER.logs.lock().unwrap().push(Log {

message: "Everything's going well".to_string(),

timestamp: 1658930674

});

println!("{:#?}", *GLOBAL_LOGGER.logs.lock().unwrap());

}

So that’s lazy_static. The other one is called once_cell and is a bit harder to use but more flexible. OnceCell is also in the process of being added to the standard library, so it’s good to know. In fact, it might even be done by the time you read this book. As of June 2023, parts of the once_cell crate can be used in the standard library (http://mng.bz/mj84). Even after the rest of the functionality is ported to the standard library, we will probably see the once_cell crate in use in Rust code for many years to come.

As the name suggests, a OnceCell is a cell that is written to once. You start it off as a OnceCell::new() of some type (like a OnceCell<String> or OnceCell<Logger>) and then call .set() to initialize the type that it holds.

A OnceCell feels pretty similar to a Cell and has similar method names, too, such as .set() and .get().

So what makes a OnceCell more flexible than lazy_static? Here are some highlights:

A OnceCell can hold a whole type (like our whole Logger), or it can be a parameter inside another type.

You can use a OnceCell with variables that we don’t know until much later in the program. Maybe we don’t know our Logger’s URL yet and need to get it somewhere. We can start main(), get the URL much later, and then stick it inside our Logger using .set().

For a OnceCell, you can choose a sync or unsync version. If you don’t need to send it between threads, just choose unsync.

Let’s give OnceCell a try with the same Logger struct as before. We’ll make the same GLOBAL_LOGGER, but this time, it will be a OnceCell<Logger>. To start a OnceCell, just use OnceCell::new(). It looks like this:

static GLOBAL_LOGGER: OnceCell<Logger> = OnceCell::new();

This gives us an empty cell that is ready for us to call .set()to initialize the value inside. The whole thing looks like this:

use once_cell::sync::OnceCell;

use reqwest::Client;

use std::sync::Mutex;

#[derive(Debug)]

struct Logger {

logs: Mutex<Vec<Log>>,

url: String,

client: Client,

}

#[derive(Debug)]

struct Log {

message: String,

timestamp: i64,

}

static GLOBAL_LOGGER: OnceCell<Logger> = OnceCell::new();

fn fetch_url() -> String { ①

②

"http://somethingsomething.com".to_string() ③

}

fn main() {

let url = fetch_url();

GLOBAL_LOGGER ④

.set(Logger {

logs: Mutex::new(vec![]),

url,

client: Client::default(),

})

.unwrap(); ⑤

GLOBAL_LOGGER ⑥

.get() ⑦

.unwrap()

.logs

.lock() ⑧

.unwrap()

.push(Log {

message: "Everything's going well".to_string(),

timestamp: 1658930674,

});

println!("{GLOBAL_LOGGER:?}"); ⑨

}

① We’ll pretend that this function needs to do something at run time to find the url.

② Pretend that there is a lot of code here.

④ The program has started, and we got the URL. Now it’s time to set the GLOBAL_LOGGER by putting a Logger struct inside.

⑤ .set() returns a Result but will return an error if the cell has already been set.

⑥ GLOBAL_LOGGER is initialized. Let’s get a reference to it.

⑦ .get()returns a None if the cell hasn’t been set yet. We’ll unwrap here, too.

⑧ Finally, we are accessing .logs inside the Logger struct, which is a Mutex. The rest of the code involves locking the Mutex and pushing a message to the Vec<Log> that it holds.

This prints out everything inside our GLOBAL_LOGGER. It works! You can see the message inside it:

OnceCell(Logger { logs: Mutex { data: [Log { message: "Everything's going

➥well", timestamp: 1658930674 }], poisoned: false, .. }, url:

➥"http://somethingsomething.com", client: Client { accepts: Accepts,

➥proxies: [Proxy(System({}), None)], referer: true, default_headers:

➥{"accept": "*/*"} } })

And don’t worry about the porting of OnceCell to the standard library, as the code is almost exactly the same. Here is the same example as before except that we are using the standard library instead. The only difference here is that a std::cell::OnceCell in the standard library is not thread-safe, while the thread-safe version is called a std::sync::OnceLock. Other than that, though, the code is exactly the same!

use reqwest::Client;

use std::sync::Mutex;

use std::sync::OnceLock;

#[derive(Debug)]

struct Logger {

logs: Mutex<Vec<Log>>,

url: String,

client: Client,

}

#[derive(Debug)]

struct Log {

message: String,

timestamp: i64,

}

static GLOBAL_LOGGER: OnceLock<Logger> = OnceLock::new();

fn fetch_url() -> String {

"http://somethingsomething.com".to_string()

}

fn main() {

let url = fetch_url();

GLOBAL_LOGGER

.set(Logger {

logs: Mutex::new(vec![]),

url,

client: Client::default(),

})

.unwrap();

GLOBAL_LOGGER

.get()

.unwrap()

.logs

.lock()

.unwrap()

.push(Log {

message: "Everything's going well".to_string(),

timestamp: 1658930674,

});

println!("{GLOBAL_LOGGER:?}");

}

So that’s how OnceCell works. You must be curious about the reqwest crate by now, but we won’t see it in detail until chapter 19 because there are a few items to take care of first. One of them is installing Rust on your computer because the Rust Playground won’t let people use reqwest to make HTTP requests. (Who knows what people would use that for . . .)

This means that we’ve finally reached the part of the book that deals with Rust on your computer (although you’ve probably already installed Rust if you’ve read this far in the book). In the next chapter, we’ll go over the basics of using Rust on your computer: installing Rust, setting up a project using Cargo, using Cargo doc to automatically generate your documentation, and all the other nice things that come with using Cargo to set up a project and run your code instead of just the Playground.

External crates are used all the time in Rust, even for key functionality like dealing with time.

If you are doing a lot of heavy computing with iterators (and don’t feel like spawning extra threads yourself), try bringing in the rayon crate.

Anyhow is the most frequently used external crate for dealing with multiple errors, while thiserror can be used to easily create your own error types.

The lazy_static and once_cell crates are used for creating global variables that can’t be constructed at compile time.

The functionality of both lazy_static and once_cell are being ported to the standard library, so eventually you may not need to use any external crates at all to create any global variables.

Blanket implementations let you give trait methods to any types you want. These are used everywhere in the standard library, too, such as when you get the .to_string() method for free for anything that implements Display.